
What is visdom?

A package that displays data visualizations as windows on a web page served by a local visdom server.

Installing visdom

!pip install visdom

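!python -m visdom.server

Running the command above starts the visdom server, and the visdom dashboard becomes available at http://localhost:8097/. The server keeps running in the foreground, so in a notebook it occupies the cell; it is often started from a separate terminal instead.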
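Because the server runs as a separate process, it can help to confirm that something is listening on the port before creating a `visdom.Visdom()` client. A minimal stdlib sketch, assuming the default port 8097 mentioned above; `port_is_open` is a hypothetical helper (recent visdom clients also expose `check_connection()` for a similar purpose).

```python
import socket


def port_is_open(host="localhost", port=8097, timeout=2.0):
    """Return True if something accepts TCP connections at host:port (hypothetical helper)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, unreachable hosts and timeouts are all OSError subclasses.
        return False


if __name__ == "__main__":
    print("visdom server reachable:", port_is_open())
```

This only checks that the TCP port accepts connections, not that the process behind it is actually visdom.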

Hands-on

import torch
import torch.nn as nn
import torchvision
import torchvision.datasets as dsets
import visdom

vis = visdom.Visdom()

Text

vis.text("Hello, world", env="main")  ## opens the window in the environment named "main"

image

a = torch.randn(3,200,200)

vis.image(a)

Displays a 200x200 image whose RGB values are random.
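`torch.randn` draws from a standard normal distribution, so many values fall below 0 or above 1. If you want to control how such data is displayed instead of relying on how the viewer treats out-of-range values, one option is to min-max rescale it first. The stdlib sketch below works on a flat list. For a tensor, the same idea is `(a - a.min()) / (a.max() - a.min())`.

```python
def min_max_scale(values):
    """Linearly rescale a sequence of numbers into [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant input: map everything to 0 to avoid dividing by zero.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


print(min_max_scale([-2.0, 0.0, 2.0]))  # [0.0, 0.5, 1.0]
```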

images

vis.images(torch.randn(3, 3, 28, 28))  ## three random 3x28x28 images (torch.Tensor(3, 3, 28, 28) would be uninitialized memory)

Displays three 28x28 images at once.
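`vis.images` tiles a batch of shape (N, C, H, W) into a grid. In the visdom client, as far as I know, the `nrow` argument (images per row) defaults to 8. Here is a sketch of the row and column arithmetic under that assumption, ignoring the padding between tiles. `grid_shape` is a hypothetical helper.

```python
import math


def grid_shape(num_images, nrow=8):
    """Return (rows, cols) of a grid holding num_images tiles, at most nrow per row."""
    if num_images == 0:
        return 0, 0
    cols = min(nrow, num_images)
    rows = math.ceil(num_images / cols)
    return rows, cols


print(grid_shape(3))   # (1, 3): the three images sit side by side
print(grid_shape(32))  # (4, 8)
```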

Example (using MNIST and CIFAR10)

MNIST = dsets.MNIST(root="./MNIST_data",
                    train=True,
                    transform=torchvision.transforms.ToTensor(),
                    download=True)

cifar10 = dsets.CIFAR10(root="./cifar10",
                        train=True,
                        transform=torchvision.transforms.ToTensor(),
                        download=True)

CIFAR10

data = cifar10[0]  ## same as cifar10.__getitem__(0); returns (image, label)
print(data[0].shape)  ## torch.Size([3, 32, 32])
vis.images(data[0], env="main")

Displays the first CIFAR10 image (a frog).

MNIST

data = MNIST[0]  ## same as MNIST.__getitem__(0); returns (image, label)
print(data[0].shape)  ## torch.Size([1, 28, 28])
vis.images(data[0], env="main")

Displays the first MNIST image (a handwritten 5).
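Indexing a dataset with `dataset[0]` is shorthand for `dataset.__getitem__(0)`. Each item of these torchvision datasets is an `(image, label)` pair, which is why `data[0]` is the image tensor. A toy stand-in shows the same protocol. The class `ToyDataset` is hypothetical and uses strings in place of image tensors.

```python
class ToyDataset:
    """Minimal map-style dataset: __len__ plus __getitem__ returning (image, label)."""

    def __init__(self, images, labels):
        self.images = images
        self.labels = labels

    def __len__(self):
        return len(self.images)

    def __getitem__(self, index):
        return self.images[index], self.labels[index]


ds = ToyDataset(images=["img0", "img1"], labels=[5, 0])
image, label = ds[0]  # same as ds.__getitem__(0)
print(image, label)   # img0 5
```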

Check dataset

## Fetch one batch of batch_size images
data_loader = torch.utils.data.DataLoader(dataset=MNIST,
                                          batch_size=32,
                                          shuffle=False)

for num, value in enumerate(data_loader):
    value = value[0]    ## each batch is (images, labels); keep the images
    print(value.shape)  ## torch.Size([32, 1, 28, 28])
    vis.images(value)
    break

All 32 images in the batch are displayed at once.
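With `batch_size=32`, the loader groups consecutive samples into batches of 32. The last batch is smaller unless `drop_last=True` discards it. The stdlib sketch below reproduces that grouping. The `batches` and `num_batches` helpers are hypothetical and are not the DataLoader implementation. The sketch also shows why the 60,000 MNIST training images give 1875 batches of 32.

```python
import math


def batches(items, batch_size, drop_last=False):
    """Yield consecutive lists of batch_size items, like an unshuffled DataLoader."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch and not drop_last:
        yield batch


def num_batches(n, batch_size, drop_last=False):
    """Number of batches produced for n samples."""
    return n // batch_size if drop_last else math.ceil(n / batch_size)


print([len(b) for b in batches(range(10), 4)])                  # [4, 4, 2]
print([len(b) for b in batches(range(10), 4, drop_last=True)])  # [4, 4]
print(num_batches(60000, 32))                                    # 1875
```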

## Close windows
vis.close(env="main")  ## closes every window in the "main" environment

Line Plot

If X is omitted, the x values are spaced evenly from 0 to 1.

Y_data = torch.randn(6)
plt = vis.line(Y=Y_data)  ## without X, the x values run from 0 to 1

X_data = torch.Tensor([1, 2, 3, 4, 5, 6])
plt = vis.line(Y=Y_data, X=X_data)
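The default X described above corresponds, as far as I can tell from the visdom client source, to `numpy.linspace(0, 1, N)` for N points. A stdlib sketch of those values follows. The helper name `default_x` is hypothetical.

```python
def default_x(n):
    """Evenly spaced x positions from 0 to 1 for n points (like linspace(0, 1, n))."""
    if n == 1:
        return [0.0]
    return [i / (n - 1) for i in range(n)]


print(default_x(6))  # [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
```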

Line update

Appends new points to an existing window and connects them to the line already drawn there.

Y_append = torch.randn(1)

X_append = torch.Tensor([7])

vis.line(Y=Y_append, X=X_append, win=plt, update='append')
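Conceptually, `update='append'` extends the series already drawn in window `win` with the new (X, Y) points, so the X and Y you pass must have matching lengths. Below is a toy in-memory model of that behaviour. The `LineWindow` class is purely illustrative and is not part of visdom. The Y values are arbitrary.

```python
class LineWindow:
    """Toy model of one line-plot window that supports appending points."""

    def __init__(self, xs, ys):
        if len(xs) != len(ys):
            raise ValueError("X and Y must have the same length")
        self.xs, self.ys = list(xs), list(ys)

    def append(self, xs, ys):
        """Add new points after the existing ones, as update='append' does."""
        if len(xs) != len(ys):
            raise ValueError("X and Y must have the same length")
        self.xs.extend(xs)
        self.ys.extend(ys)


win = LineWindow([1, 2, 3, 4, 5, 6], [0.1, -0.3, 0.5, 0.2, -1.0, 0.7])
win.append([7], [0.4])
print(win.xs)  # [1, 2, 3, 4, 5, 6, 7]
```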

Multiple lines in a single window

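num = torch.Tensor(list(range(0, 10)))
print(num.shape)
num = num.view(-1, 1)               ## column vector: (10,) -> (10, 1)
print(num.shape)
num = torch.cat((num, num), dim=1)  ## two copies side by side: (10, 2)
print(num.shape)

plt = vis.line(Y=torch.randn(10, 2), X=num)  ## each column is one line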

>>>

torch.Size([10])

torch.Size([10, 1])

torch.Size([10, 2])
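`view(-1, 1)` turns the 10 x-values into a column, and `torch.cat(..., dim=1)` places two copies side by side. X and Y are then both (10, 2), and column j of X pairs with column j of Y to draw line j. The sketch below performs the same reshaping on nested lists using only the standard library. `to_columns` is a hypothetical helper, and the Y values are arbitrary.

```python
def to_columns(values, copies):
    """Turn a flat list into rows of `copies` identical entries: shape (len, copies)."""
    return [[v] * copies for v in values]


num = to_columns(list(range(10)), 2)
print(len(num), len(num[0]))  # 10 2
print(num[:2])                # [[0, 0], [1, 1]]

# Column j of X and column j of Y describe line j.
ys = [[0.5, -0.5] for _ in range(10)]
line_0 = [(row_x[0], row_y[0]) for row_x, row_y in zip(num, ys)]
print(line_0[:2])             # [(0, 0.5), (1, 0.5)]
```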

Line info

## title: the window's title
plt = vis.line(Y=Y_data, X=X_data, opts=dict(title='Test', showlegend=True))

## legend: a label for each line
plt = vis.line(Y=Y_data,
               X=X_data,
               opts=dict(title='Test',        ## title
                         legend=['Line 1'],   ## legend
                         showlegend=True))

plt = vis.line(Y=torch.randn(10, 2),
               X=num,
               opts=dict(title='Test',
                         legend=['Line 1', 'Line 2'],
                         showlegend=True))
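The `legend` list needs one label per line, that is, one entry per column of Y. The small helper below builds the `opts` dict and checks this before it is passed as `vis.line(..., opts=...)`. `make_line_opts` is a hypothetical name, and the helper only uses the `title`, `legend` and `showlegend` keys shown above.

```python
def make_line_opts(title, legend, num_lines):
    """Build an opts dict for vis.line, checking one legend entry per line."""
    if len(legend) != num_lines:
        raise ValueError(f"expected {num_lines} legend labels, got {len(legend)}")
    return dict(title=title, legend=list(legend), showlegend=True)


opts = make_line_opts("Test", ["Line 1", "Line 2"], num_lines=2)
print(opts)  # {'title': 'Test', 'legend': ['Line 1', 'Line 2'], 'showlegend': True}
```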

A helper function for updating a line

## Helper for plotting the loss curve
def loss_tracker(loss_plot, loss_value, num):
    '''num and loss_value are Tensors'''
    vis.line(X=num,
             Y=loss_value,
             win=loss_plot,
             update='append')

plt = vis.line(Y=torch.Tensor(1).zero_())

for i in range(500):
    loss = torch.randn(1) + i
    loss_tracker(plt, loss, torch.Tensor([i]))

## Close windows
vis.close(env="main")
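Each `vis.line` call is a separate request to the visdom server, so the 500-step loop sends 500 requests. One alternative is to buffer points and append them in chunks, because `update='append'` also accepts several X/Y values in one call. The sketch below injects the sending step as a function so it runs without a server. `BufferedLine` and `send` are hypothetical names. In practice, `send` would wrap something like `vis.line(X=torch.Tensor(xs), Y=torch.Tensor(ys), win=plt, update='append')`.

```python
class BufferedLine:
    """Collect (x, y) points and hand them to `send` in chunks of `chunk_size`."""

    def __init__(self, send, chunk_size=50):
        self.send = send
        self.chunk_size = chunk_size
        self.xs, self.ys = [], []

    def add(self, x, y):
        self.xs.append(x)
        self.ys.append(y)
        if len(self.xs) >= self.chunk_size:
            self.flush()

    def flush(self):
        """Send any buffered points, then start a fresh buffer."""
        if self.xs:
            self.send(self.xs, self.ys)
            self.xs, self.ys = [], []


calls = []
tracker = BufferedLine(send=lambda xs, ys: calls.append((xs, ys)), chunk_size=50)
for i in range(120):
    tracker.add(i, float(i))
tracker.flush()    # send the remaining 20 points
print(len(calls))  # 3
```

The trade-off is latency: the plot only updates once per chunk, so a smaller `chunk_size` gives a more live view at the cost of more requests.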

MNIST-CNN with visdom

import torch
import torch.nn as nn
import torchvision.datasets as dsets
import torchvision.transforms as transforms
import torch.nn.init
import visdom

vis = visdom.Visdom()
vis.close(env="main")

def loss_tracker(loss_plot, loss_value, num):
    '''num and loss_value are Tensors'''
    vis.line(X=num,
             Y=loss_value,
             win=loss_plot,
             update='append')

device = 'cuda' if torch.cuda.is_available() else 'cpu'

torch.manual_seed(777)
if device == 'cuda':
    torch.cuda.manual_seed_all(777)

# parameters
learning_rate = 0.001
training_epochs = 15
batch_size = 32

# MNIST dataset

mnist_train = dsets.MNIST(root='MNIST_data/',
                          train=True,
                          transform=transforms.ToTensor(),
                          download=True)

mnist_test = dsets.MNIST(root='MNIST_data/',
                         train=False,
                         transform=transforms.ToTensor(),
                         download=True)

data_loader = torch.utils.data.DataLoader(dataset=mnist_train,
                                          batch_size=batch_size,
                                          shuffle=True,
                                          drop_last=True)

class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.layer1 = nn.Sequential(
            nn.Conv2d(1, 32, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2)
        )
        self.layer2 = nn.Sequential(
            nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2)
        )
        self.layer3 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2)
        )
        self.fc1 = nn.Linear(3 * 3 * 128, 625)
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(625, 10, bias=True)
        torch.nn.init.xavier_uniform_(self.fc1.weight)
        torch.nn.init.xavier_uniform_(self.fc2.weight)

    def forward(self, x):
        out = self.layer1(x)
        out = self.layer2(out)
        out = self.layer3(out)
        out = out.view(out.size(0), -1)  # flatten to (batch, 3*3*128)
        out = self.fc1(out)
        out = self.relu(out)
        out = self.fc2(out)
        return out

model = CNN().to(device)

# sanity check: one dummy 1x28x28 image in, 10 class scores out
value = torch.Tensor(1, 1, 28, 28).to(device)
print(model(value).shape)

criterion = nn.CrossEntropyLoss().to(device)

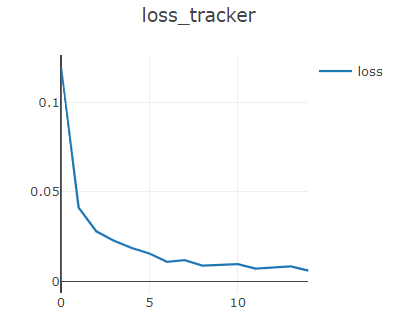
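The `3*3*128` input size of `fc1` comes from the three conv/pool stages. Each `Conv2d(kernel_size=3, stride=1, padding=1)` keeps the spatial size, and each `MaxPool2d(2)` halves it, rounding down. The stdlib check below applies the standard output-size formula floor((n + 2p - k) / s) + 1, assuming no dilation. `out_size` is a hypothetical helper.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a conv or pooling layer (no dilation)."""
    return (n + 2 * padding - kernel) // stride + 1


size = 28
for _ in range(3):                                        # layer1, layer2, layer3
    size = out_size(size, kernel=3, stride=1, padding=1)  # Conv2d keeps the size
    size = out_size(size, kernel=2, stride=2)             # MaxPool2d(2) halves it
    print(size)                                           # 14, then 7, then 3

print(size * size * 128)  # 1152 == 3*3*128, the fc1 input size
```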

optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

loss_plt = vis.line(Y=torch.Tensor(1).zero_(),
                    opts=dict(title='loss_tracker',
                              legend=['loss'],
                              showlegend=True))

Train with loss_tracker

# training
total_batch = len(data_loader)

for epoch in range(training_epochs):
    avg_cost = 0
    for X, Y in data_loader:
        X = X.to(device)
        Y = Y.to(device)

        optimizer.zero_grad()
        hypothesis = model(X)
        cost = criterion(hypothesis, Y)
        cost.backward()
        optimizer.step()

        # .item() accumulates a plain float, so each batch's autograd graph is not kept alive
        avg_cost += cost.item() / total_batch

    print('[Epoch:{}] cost = {}'.format(epoch + 1, avg_cost))
    loss_tracker(loss_plt, torch.Tensor([avg_cost]), torch.Tensor([epoch]))

print('Learning Finished!')

>>>

[Epoch:1] cost = 0.1199251338839531

[Epoch:2] cost = 0.04109795391559601

[Epoch:3] cost = 0.027864443138241768

[Epoch:4] cost = 0.022573506459593773

[Epoch:5] cost = 0.018547339364886284

[Epoch:6] cost = 0.015445930883288383

[Epoch:7] cost = 0.010787862353026867

[Epoch:8] cost = 0.011658226139843464

[Epoch:9] cost = 0.008654269389808178

[Epoch:10] cost = 0.009108899161219597

[Epoch:11] cost = 0.009518935345113277

[Epoch:12] cost = 0.0069547598250210285

[Epoch:13] cost = 0.007706377189606428

[Epoch:14] cost = 0.00818073283880949

[Epoch:15] cost = 0.005866056773811579

Learning Finished!

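with torch.no_grad():
    # test_data / test_labels are deprecated aliases of .data / .targets
    # .data holds raw 0-255 pixels; dividing by 255 matches the ToTensor() scaling used in training
    # (the accuracy shown below was produced without this scaling)
    X_test = (mnist_test.data.view(len(mnist_test), 1, 28, 28).float() / 255.).to(device)
    Y_test = mnist_test.targets.to(device)

    prediction = model(X_test)
    correct_prediction = torch.argmax(prediction, 1) == Y_test
    accuracy = correct_prediction.float().mean()
    print('Accuracy:', accuracy.item())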

>>>

Accuracy: 0.9635999798774719
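The accuracy computation takes the index of the largest of the 10 outputs for each test image (`torch.argmax(prediction, 1)`). It then compares that index with the label and averages the resulting booleans. The same computation on plain lists is sketched below. The scores are made up for illustration, and `accuracy` here is a hypothetical helper, not a library function.

```python
def accuracy(scores, labels):
    """Fraction of rows whose highest-scoring index equals the label."""
    predictions = [max(range(len(row)), key=row.__getitem__) for row in scores]
    correct = [int(p == y) for p, y in zip(predictions, labels)]
    return sum(correct) / len(correct)


scores = [[0.1, 2.0, -1.0],  # predicts class 1
          [3.0, 0.5, 0.2],   # predicts class 0
          [0.0, 0.1, 0.4]]   # predicts class 2
print(accuracy(scores, [1, 0, 1]))  # 0.6666666666666666
```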
